What is an LLM Chatbot and How to Build One?

- November 21, 2024

- 12 mins read

What if your chatbot could speak every language your audience does, generate leads while you sleep, and engage with customers as naturally as a human? Businesses need smarter, more efficient ways to connect with customers, and LLM chatbots are the game-changing AI technology redefining how businesses interact with them.

In this blog, you’ll learn what an LLM chatbot is, how it differs from a traditional chatbot, why a business should use one, and how to build one for your business to deliver efficient, scalable support.

What is an LLM Chatbot?

A large language model (LLM) is an AI system trained on vast amounts of text (and, in some cases, other types of data) to understand context, generate human-like responses, and process complex, multilingual interactions.

An LLM chatbot is an advanced AI technology powered by large language models designed to understand and generate human-like responses in multiple languages. It helps businesses offer instant, accurate support, even for complex queries, without needing constant human intervention.

For instance, REVE Chat’s LLM-powered chatbot manages high volumes of customer inquiries across various languages, operating 24/7 to reduce wait times, lighten the workload on support teams, and enhance the overall customer experience.

What Makes an LLM Chatbot Different From a Traditional Chatbot?

Unlike traditional chatbots, which rely on predefined scripts and struggle with multilingual support and fluid conversation, LLM chatbots generate natural, context-aware responses and adapt readily to different languages. They can handle complex inquiries, understand nuance, and provide more personalized, human-like interactions.

LLM chatbots are built on transformer-based models, such as GPT or LLaMA, which are trained on vast amounts of unstructured text data. These models use attention mechanisms to weigh how each word relates to the others in its context, which lets them switch seamlessly between topics, provide relevant responses, and personalize interactions.

Building such a chatbot requires large-scale pre-trained models, cloud-based infrastructure (e.g., AWS or Google Cloud) for scalability, and fine-tuning capabilities like those offered by Hugging Face. Integration with external systems like CRMs, ticketing platforms, or knowledge bases ensures the chatbot can deliver accurate, domain-specific support and function as part of a broader business ecosystem.
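To make the attention mechanism mentioned above a little more concrete, here is a minimal pure-Python sketch of scaled dot-product attention, the core operation inside transformer layers. It is a toy: real models such as GPT or LLaMA learn separate query, key, and value projections, use many attention heads, and run on optimized tensor libraries, and the three 2-D token vectors below are made up for illustration.

```python
import math


def softmax(scores):
    """Convert raw scores into weights that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries, keys, values):
    """Scaled dot-product attention: softmax(Q K^T / sqrt(d)) V."""
    d = len(keys[0])
    outputs = []
    for q in queries:
        # How strongly this query matches every key, scaled by sqrt(d)
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        weights = softmax(scores)
        # The output is a weighted average of the value vectors
        outputs.append(
            [sum(w * v[j] for w, v in zip(weights, values)) for j in range(len(values[0]))]
        )
    return outputs


# Toy self-attention: the same token vectors act as queries, keys, and values
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
for row in attention(tokens, tokens, tokens):
    print([round(x, 3) for x in row])
```

Each output row blends information from all tokens, weighted by relevance, which is how a transformer lets context shape the meaning of every word.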

How Does an LLM Chatbot Work?

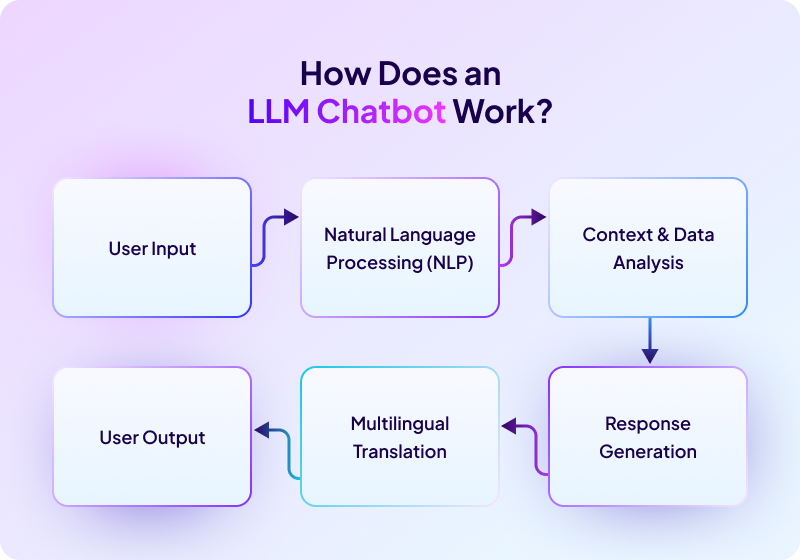

An LLM Chatbot for customer experience operates through a series of sophisticated steps that enable it to understand, process, and respond to user queries with a conversational, human-like quality.

Here’s a breakdown of the process:

The process begins when a user sends a query, whether in text or voice format, which may be in different languages or contain regional nuances. The chatbot then uses Natural Language Processing (NLP) to analyze the input, breaking it down to identify intent, context, and sentiment. This allows the chatbot to understand not just what the user is asking, but also the tone of the message, ensuring a more accurate response.

Next, the LLM generates a reply, drawing on the knowledge it learned during training, the context of the current conversation, and, where configured, relevant business data such as a knowledge base. Using transformer models, it produces a natural, context-aware reply tailored to the user’s query. If needed, the chatbot can translate the response into other languages to serve diverse customer bases. The chatbot then delivers the response instantly, often offering follow-up options or connecting the user to a live agent if required.
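The flow above can be sketched in a few lines of Python. This is a hypothetical outline, not REVE Chat’s implementation: `analyze` stands in for the NLP step with simple keyword rules, `call_llm` is a placeholder for whatever LLM API you use, and translation is left out. The conversation history is kept so the model can see earlier turns as context.

```python
from dataclasses import dataclass, field


@dataclass
class Conversation:
    # (role, text) pairs that would be passed to the model as context
    history: list = field(default_factory=list)


def analyze(text):
    """Toy stand-in for the NLP step: keyword rules for intent and sentiment."""
    lowered = text.lower()
    intent = "order_status" if "order" in lowered else "general"
    negative_words = ("angry", "terrible", "late")
    sentiment = "negative" if any(w in lowered for w in negative_words) else "neutral"
    wants_human = "agent" in lowered or "human" in lowered
    return {"intent": intent, "sentiment": sentiment, "wants_human": wants_human}


def call_llm(history, analysis):
    """Hypothetical placeholder for a real LLM API call."""
    last_user_text = history[-1][1]
    return f"[{analysis['intent']}] Thanks for your message: {last_user_text!r}"


def handle_message(convo, text):
    convo.history.append(("user", text))
    analysis = analyze(text)
    # Hand off to a person when asked, or when the message reads as negative
    if analysis["wants_human"] or analysis["sentiment"] == "negative":
        reply = "Let me connect you with a live agent."
    else:
        reply = call_llm(convo.history, analysis)
    convo.history.append(("assistant", reply))
    return reply


convo = Conversation()
print(handle_message(convo, "Where is my order?"))
print(handle_message(convo, "Can I talk to an agent?"))
```

Keeping the escalation rule outside the model makes the handoff behavior predictable, while the LLM handles the open-ended replies.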

Why Should a Business Consider Using LLM Chatbot?

By using LLM chatbots effectively, businesses can improve customer satisfaction, simplify operations, and establish themselves as reliable, customer-focused service providers. Here are the top reasons to use an LLM chatbot for your business.

1. Personalized Product Recommendations

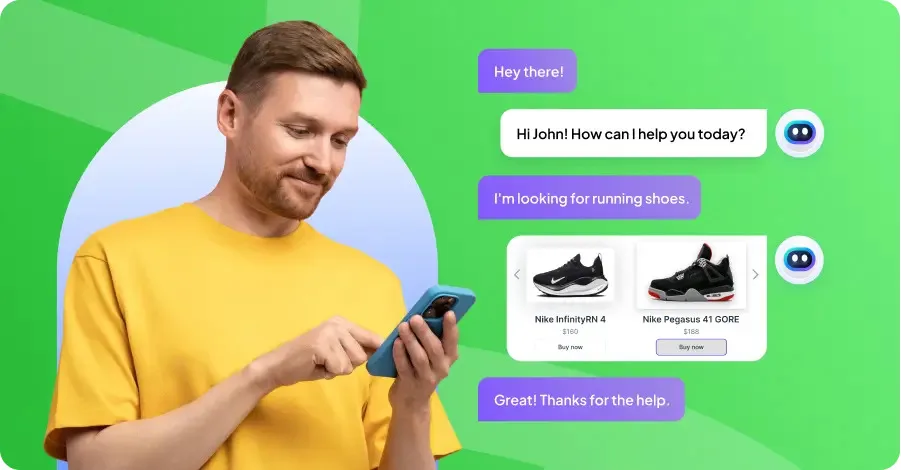

Using an LLM chatbot to deliver personalized product recommendations helps businesses drive sales, improve customer engagement, and enhance user experience. Through advanced natural language processing and data analysis, these chatbots can analyze customer behavior, purchase history, and preferences to suggest products tailored to individual needs.

According to McKinsey, personalization can increase sales by up to 10%, and 80% of customers are more likely to purchase from brands that offer personalized experiences.

In an e-commerce setting, for example, an LLM chatbot might recommend items based on a user’s browsing history or complementary products based on a recent purchase. This not only improves conversion rates but also enhances customer loyalty, as customers feel valued and understood.

By delivering relevant recommendations, businesses can maximize revenue from existing traffic while building a more satisfying shopping experience.
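One common way to generate complementary-product suggestions is a frequently-bought-together count over past orders. The sketch below is an assumption about how such a component might look, and the order data is made up; a chatbot could call something like `recommend` and let the LLM phrase the suggestion conversationally.

```python
from collections import Counter
from itertools import combinations


def build_co_purchase_counts(orders):
    """Count how often each ordered pair of products appears in the same order."""
    pair_counts = Counter()
    for order in orders:
        for a, b in combinations(sorted(set(order)), 2):
            pair_counts[(a, b)] += 1
            pair_counts[(b, a)] += 1
    return pair_counts


def recommend(product, pair_counts, top_n=2):
    """Return the products most often bought together with `product`."""
    scores = Counter({b: n for (a, b), n in pair_counts.items() if a == product})
    return [item for item, _ in scores.most_common(top_n)]


# Made-up purchase history for illustration
orders = [
    ["phone", "case", "charger"],
    ["phone", "case"],
    ["laptop", "mouse"],
    ["phone", "charger", "earbuds"],
    ["phone", "case", "earbuds"],
]
counts = build_co_purchase_counts(orders)
print(recommend("phone", counts))
```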

2. Real-Time Order Tracking and Updates

Customers expect transparency when it comes to their orders. Providing real-time tracking updates has become a standard expectation in e-commerce. LLM chatbots excel at managing these inquiries by instantly retrieving and sharing order status updates, estimated delivery times, and any delays.

A study by MetaPack found that 96% of customers believe that delivery performance affects brand loyalty, highlighting the importance of real-time tracking.

Through an LLM chatbot, businesses can automate tracking updates, reduce pressure on customer service teams, and keep customers informed without delay. This convenience boosts customer satisfaction, reduces follow-up inquiries, and fosters trust.

For instance, a telecom business can use this feature to update customers on the status of equipment deliveries or service installations, keeping them engaged and informed.
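Order tracking mostly comes down to looking up a record and turning it into a clear reply. In this sketch, `ORDERS` is a hypothetical in-memory stand-in for an order-management system or carrier API, and the field names are assumptions.

```python
from datetime import date

# Hypothetical order records; a real bot would query an order system or carrier API
ORDERS = {
    "A1001": {"status": "shipped", "eta": date(2024, 11, 25), "delayed": False},
    "A1002": {"status": "processing", "eta": date(2024, 11, 28), "delayed": True},
}


def order_update(order_id):
    """Build the status message the chatbot would send for one order."""
    order = ORDERS.get(order_id)
    if order is None:
        return f"Sorry, I couldn't find order {order_id}. Could you double-check the number?"
    message = (
        f"Order {order_id} is {order['status']}, "
        f"estimated delivery {order['eta'].isoformat()}."
    )
    if order["delayed"]:
        message += " It is running behind schedule; we'll notify you of any further changes."
    return message


print(order_update("A1002"))
```

The same lookup-and-reply pattern would apply to a telecom equipment delivery or installation appointment.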

3. Human-Like Conversation

One of the standout features of an LLM chatbot is its ability to hold human-like conversations. By understanding context, tone, and intent, it responds in a natural, engaging manner that mimics human interaction. This capability builds trust and rapport with customers, making them feel heard and valued.

Businesses can use this human-like communication to nurture leads more effectively, guide users through decision-making processes, and create personalized experiences, all while switching seamlessly between languages to serve a diverse audience.

4. Appointment Scheduling and Reminders

Industries such as BFSI (banking, financial services, and insurance) and healthcare often require customers to schedule appointments for consultations or check-ins. LLM chatbots can facilitate this process by allowing customers to book appointments, reschedule as needed, and receive automated reminders.

Studies have shown that appointment reminders can decrease no-show rates by up to 30%, which is crucial for industries reliant on scheduled interactions.

In addition to improving efficiency, automated scheduling enhances the customer experience by providing a quick, self-service option for appointment management. For example, a bank could use an LLM Chatbot for customer service to help schedule in-branch consultations with financial advisors.

This reduces the need for manual booking systems and adds convenience, saving customers time while helping businesses optimize their schedules.
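Behind a conversational booking flow there is usually plain scheduling logic. This hypothetical sketch computes which reminders to send for an appointment; the reminder offsets (one day and two hours before) are assumed values, and rescheduling would simply call `reminder_times` again with the new time.

```python
from datetime import datetime, timedelta

# Assumed reminder policy: one day before and two hours before the appointment
REMINDER_OFFSETS = [timedelta(days=1), timedelta(hours=2)]


def reminder_times(appointment, now):
    """Return the reminder timestamps that are still in the future."""
    return [appointment - offset for offset in REMINDER_OFFSETS if appointment - offset > now]


now = datetime(2024, 11, 21, 9, 0)
consultation = datetime(2024, 11, 22, 8, 0)  # hypothetical in-branch advisor meeting
# The one-day reminder would fall before `now`, so only the two-hour reminder remains
print(reminder_times(consultation, now))
```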

5. Proactive Issue Resolution

Unlike traditional chatbots that wait for customers to initiate interactions, LLM chatbots can analyze past interactions, user behavior, or system data to anticipate customer needs and offer solutions in advance.

For example, if a customer repeatedly asks about product delivery timelines, the chatbot might proactively notify them of shipment updates or delays, so they don’t need to follow up.

These chatbots also monitor patterns in customer queries and system activity to predict common issues.
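A simple way to picture this kind of pattern monitoring is to count query topics over a recent time window. The thresholds and topic labels below are assumptions for illustration; a real system would classify topics with the LLM or an intent model and read from actual chat logs.

```python
from collections import Counter, defaultdict

REPEAT_THRESHOLD = 2  # assumed: two delivery questions trigger proactive updates
SPIKE_THRESHOLD = 3   # assumed: three different customers on one topic flags an issue


def find_proactive_actions(queries):
    """queries: list of (customer_id, topic) pairs from a recent time window."""
    per_customer = defaultdict(Counter)
    customers_per_topic = defaultdict(set)
    for customer, topic in queries:
        per_customer[customer][topic] += 1
        customers_per_topic[topic].add(customer)

    # Customers who keep asking about delivery get shipment updates pushed to them
    notify = sorted(
        c for c, topics in per_customer.items() if topics["delivery"] >= REPEAT_THRESHOLD
    )
    # Topics raised by many different customers may signal a wider issue
    issues = sorted(
        t for t, cs in customers_per_topic.items() if len(cs) >= SPIKE_THRESHOLD
    )
    return notify, issues


queries = [
    ("c1", "delivery"), ("c1", "delivery"), ("c2", "billing"),
    ("c3", "login"), ("c4", "login"), ("c2", "login"),
]
print(find_proactive_actions(queries))  # (['c1'], ['login'])
```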

6. 24/7 Customer Service Availability

Customer needs can arise at any hour, whether it’s a banking emergency, a question about a product, or a technical telecom issue. However, maintaining a 24/7 human support team can be costly and logistically challenging. LLM chatbots offer an effective solution by being “always on,” providing instant, intelligent assistance around the clock.

While traditional chatbots can also offer 24/7 support, LLM chatbots take it a step further by delivering context-aware, natural conversations that adapt to the complexity of customer queries. Unlike rule-based chatbots, which rely on fixed scripts and often struggle with nuanced or unexpected questions, LLM chatbots leverage advanced AI and language models to understand and respond to a wide variety of inquiries with greater accuracy and personalization. This makes them not just responsive, but also proactive in providing relevant, tailored support.

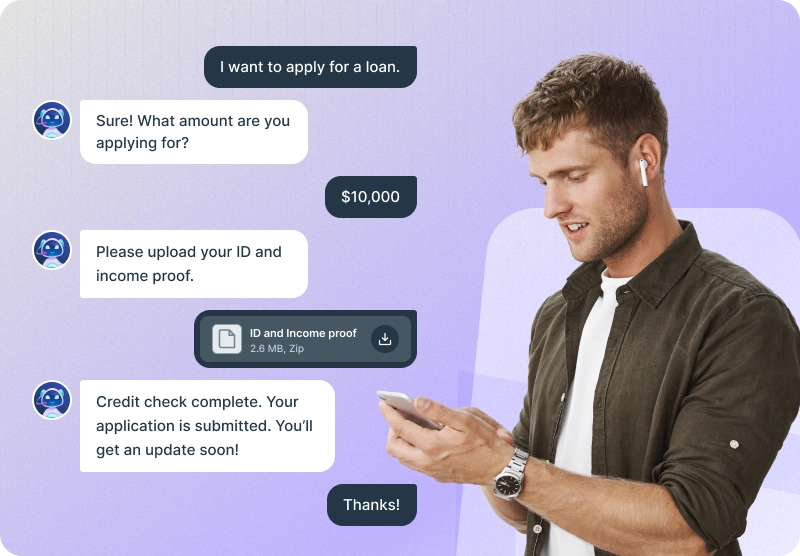

7. Automated Loan Application Assistance

In the BFSI industry, the loan application process is often complex and time-consuming. An LLM chatbot for customer service simplifies this by guiding customers through the application process, answering frequently asked questions, and helping users upload the necessary documents. By automating loan applications, banks and financial institutions can reduce processing times and minimize human error.

Chatbots can also screen initial application criteria, such as credit scores, allowing financial institutions to prioritize qualified applicants. Research shows that 64% of consumers prefer messaging over calls for customer service, which indicates a demand for digital solutions.
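Once the chatbot has collected the applicant’s details conversationally, an initial screen can be a handful of rule checks. The thresholds, field names, and required documents below are hypothetical; real criteria come from each lender’s credit policy, and a pre-screen like this would only help prioritize applications, not approve them.

```python
from dataclasses import dataclass

# Hypothetical screening rules; real criteria are set by each lender's credit policy
MIN_CREDIT_SCORE = 650
MAX_DEBT_TO_INCOME = 0.40
REQUIRED_DOCUMENTS = {"id_proof", "income_proof"}


@dataclass
class Application:
    credit_score: int
    monthly_income: float
    monthly_debt: float
    documents: set


def prescreen(app):
    """Return (passes, reasons) for an initial, non-binding eligibility check."""
    reasons = []
    if app.credit_score < MIN_CREDIT_SCORE:
        reasons.append("credit score below minimum")
    if app.monthly_income <= 0 or app.monthly_debt / app.monthly_income > MAX_DEBT_TO_INCOME:
        reasons.append("income missing or debt-to-income ratio too high")
    missing = REQUIRED_DOCUMENTS - app.documents
    if missing:
        reasons.append("missing documents: " + ", ".join(sorted(missing)))
    return not reasons, reasons


print(prescreen(Application(700, 5000, 1500, {"id_proof"})))
```

Returning the reasons, not just a yes or no, lets the chatbot tell the applicant exactly what to fix, such as uploading a missing document.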

How to Build an LLM Chatbot for Your Business?

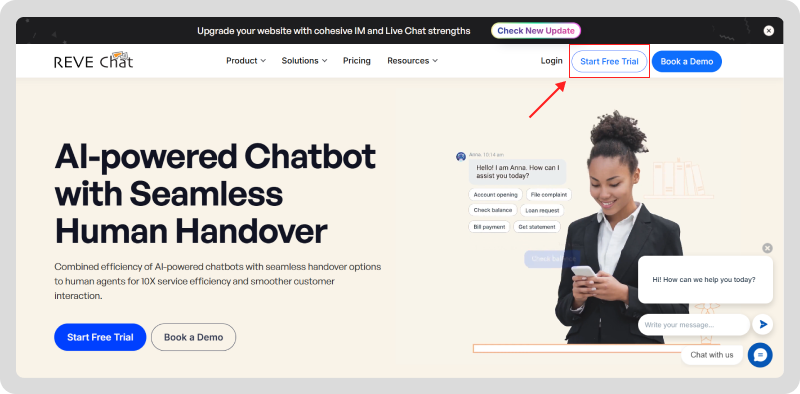

There are many ways to build an LLM chatbot, depending on your needs. Here, I’ll show you how to build one using REVE Chat, with a detailed, step-by-step approach to setting up, training, and deploying your chatbot.

So, let’s get started.

Step 1: Log in or Sign Up

Log in to your REVE Chat account. If you don’t have an account, sign up for a 14-day free trial.

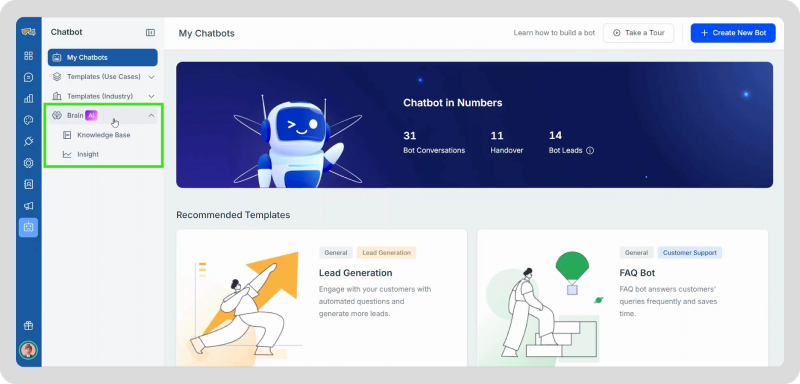

Step 2: Access the Chatbot Module

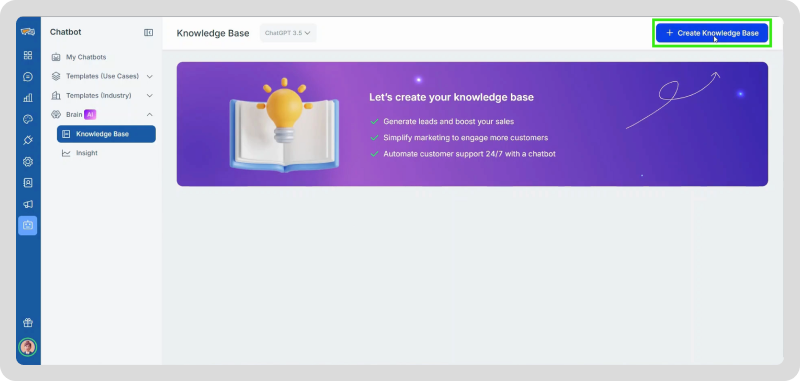

In the left navigation bar, select the Chatbot Module. Then click the New Brain AI tab and choose the Knowledge Base submenu.

Step 3: Create a Knowledge Base

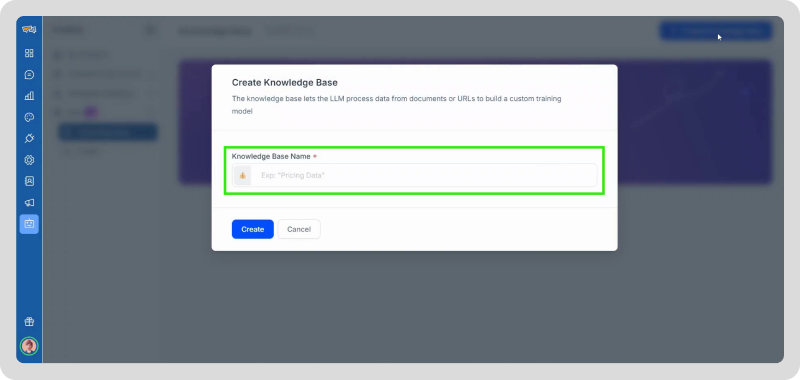

In the Knowledge Base Window, click the Create Button to add a new knowledge base. A pop-up will appear. Enter a name for the Knowledge Base and click Create.

Step 4: Add and Train Data Sources

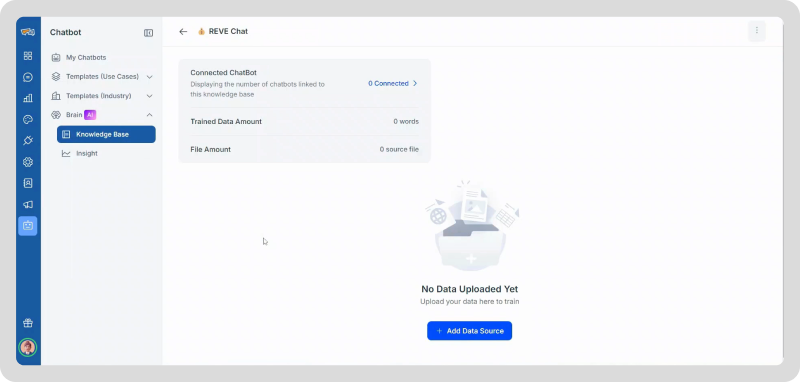

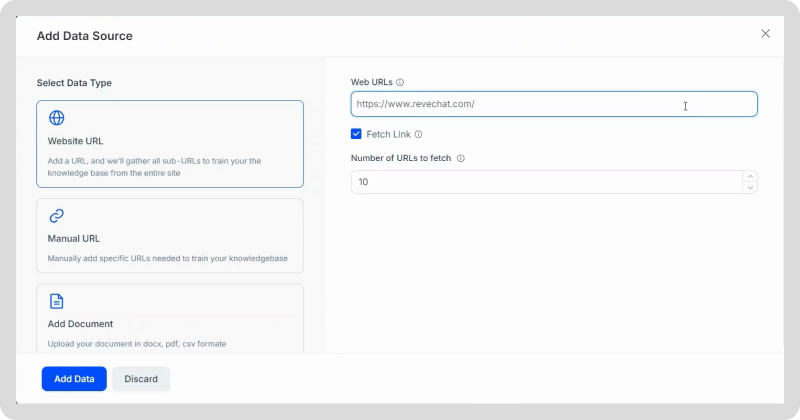

In the Knowledge Base details window, you can add or update data sources. To add a new data source, click the Add Data Source button.

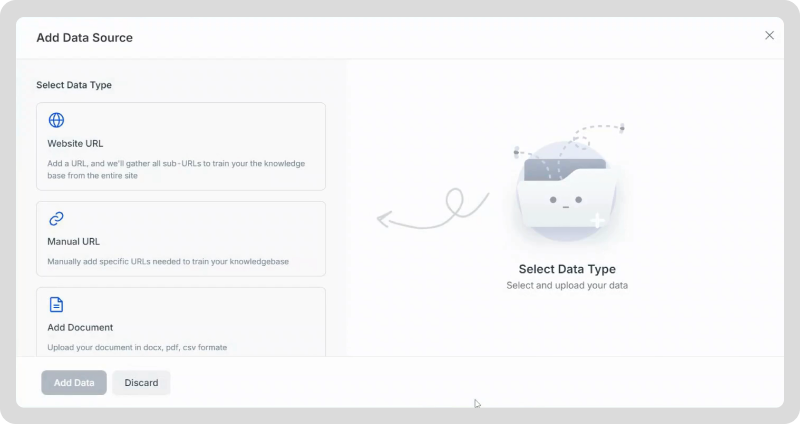

A pop-up will appear where you can add URLs or PDF documents as data sources. If you want to use your company web domain, you can add URLs manually or automatically.

For automatic addition, simply provide the main web domain, then enable the fetch links option and set the number of subdomains to add (e.g., 10).
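For readers curious about what automatic URL addition involves, the sketch below collects up to a set number of same-site links from a page’s HTML using Python’s standard library. It is a generic illustration, not REVE Chat’s crawler: it does not download pages, and it keeps only links on the exact same host as the starting URL.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin, urlparse


class LinkCollector(HTMLParser):
    """Collect the href value of every <a> tag in a page."""

    def __init__(self):
        super().__init__()
        self.hrefs = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.hrefs.append(value)


def same_site_links(base_url, html, limit=10):
    """Return up to `limit` unique absolute links on the same host as base_url."""
    parser = LinkCollector()
    parser.feed(html)
    host = urlparse(base_url).netloc
    links = []
    for href in parser.hrefs:
        if len(links) >= limit:
            break
        # Resolve relative links against the base URL and drop #fragments
        absolute = urljoin(base_url, href).split("#")[0]
        if urlparse(absolute).netloc == host and absolute not in links:
            links.append(absolute)
    return links


page = (
    '<a href="/pricing">Pricing</a>'
    '<a href="/features#chat">Chat</a>'
    '<a href="https://other.example/x">Elsewhere</a>'
    '<a href="/pricing">Pricing again</a>'
)
print(same_site_links("https://example.com/", page))
```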

Step 5: Prepare and Upload Data Sources

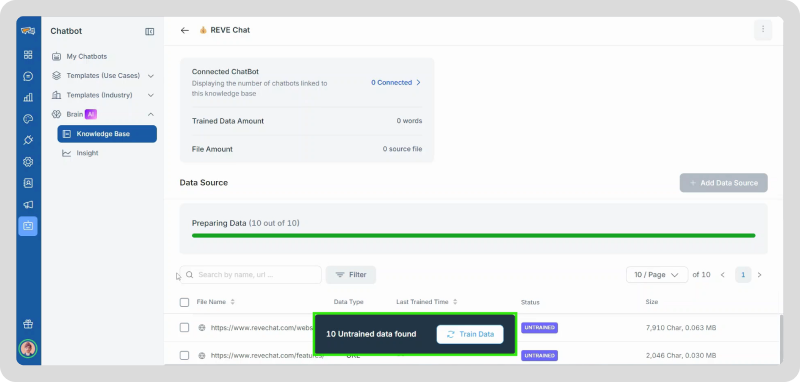

After providing data sources, REVE Chat will prepare the data for upload. The preparation time will vary depending on the data source size.

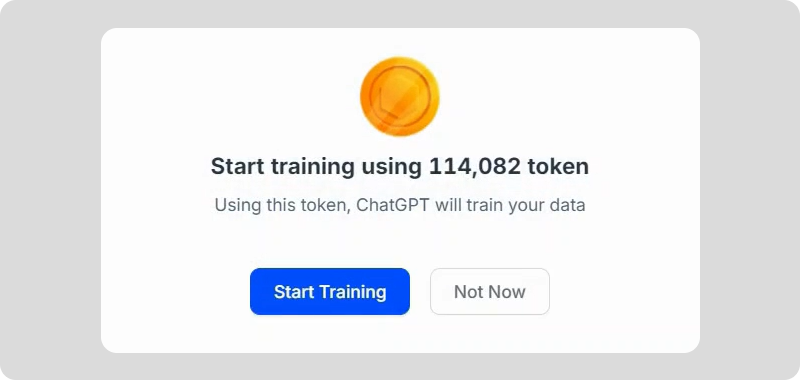

Once uploaded, the data needs to be trained before the chatbot can use it to respond to customer queries. During training, the system crawls the data sources and uses the LLM to generate vector representations (embeddings) of the content.

Uploading data sources consumes no LLM tokens, but the training phase does, because tokens are used to build the vector repository.
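Before content can be turned into vectors, it is typically split into smaller, overlapping chunks. This sketch shows one simple word-based chunking strategy with assumed chunk size and overlap values; it is not necessarily how REVE Chat preprocesses data. In a typical embedding pipeline, each chunk is sent to an embedding model, which is one reason an indexing or training step consumes tokens while a plain upload does not.

```python
def chunk_words(text, chunk_size=50, overlap=10):
    """Split text into overlapping windows of `chunk_size` words.

    Consecutive chunks share `overlap` words, so text near a boundary
    appears in both neighbouring chunks.
    """
    if overlap >= chunk_size:
        raise ValueError("overlap must be smaller than chunk_size")
    words = text.split()
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        if start + chunk_size >= len(words):
            break  # this window already reached the end of the text
    return chunks


sample = " ".join(f"word{i}" for i in range(120))  # placeholder text
for chunk in chunk_words(sample):
    print(chunk.split()[0], "...", chunk.split()[-1])
```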

Step 6: Create the LLM Chatbot

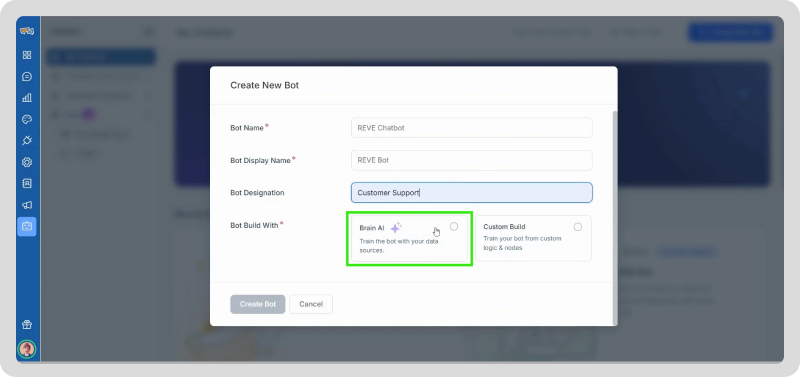

Go to the My Chatbots Tab and click Create New Bot.

In the pop-up, enter the bot name, display name, and designation. Select Brain AI for the bot build option and click the Create Bot Button.

Step 7: Build the Chatbot Logic

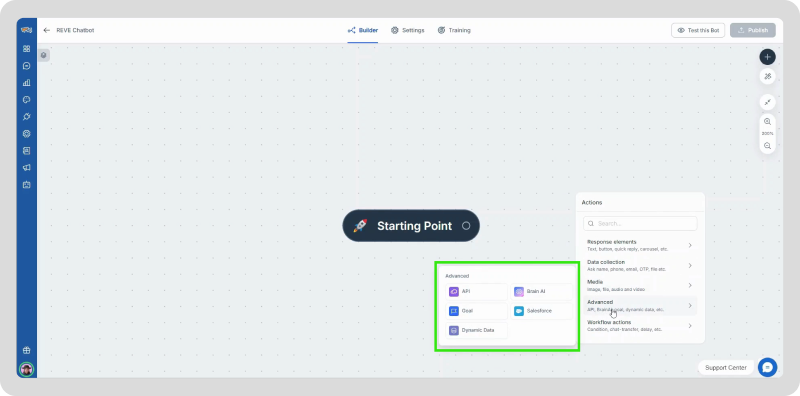

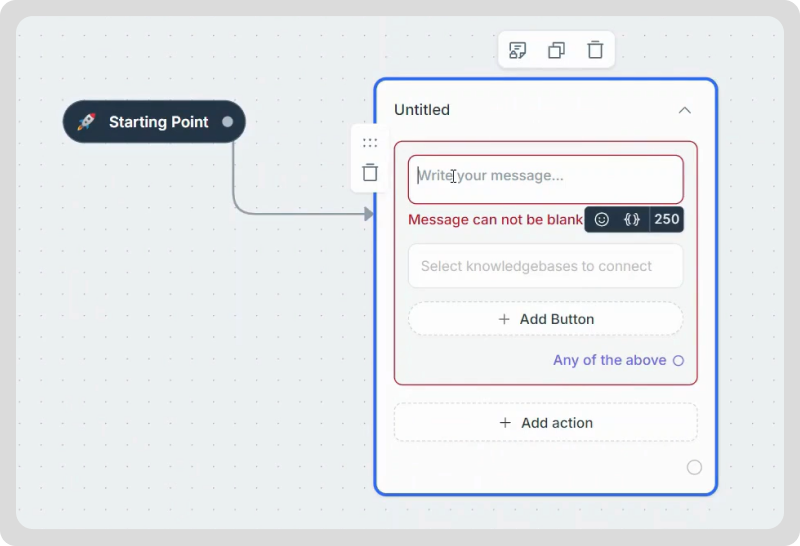

On the Chatbot Builder page, click the connector at the starting point and drag it to an open space. A Chatbot Action Panel will appear.

From the Chatbot Action Panel, navigate to the Advanced Action Submenu and select Brain AI Action.

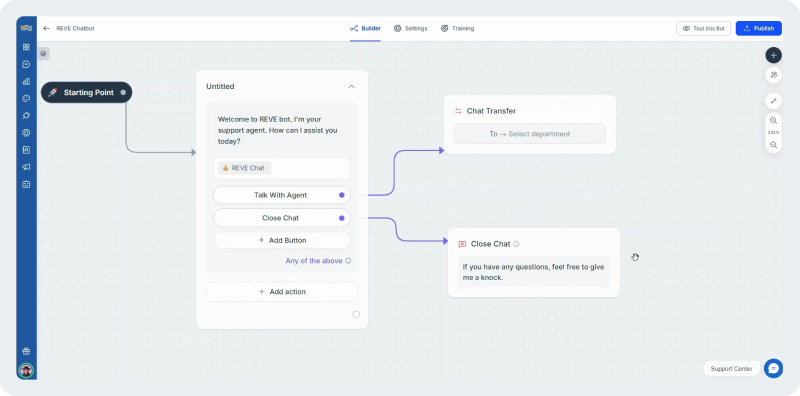

Add a Text Message to be displayed when the bot is triggered, and select the Knowledge Base you trained earlier to respond to visitor queries.

Step 8: Set Response Limits and Add Custom Buttons

Now you can limit the number of queries the chatbot answers before transferring to a live agent, or select Unlimited for continuous responses. Add Custom Buttons to each LLM response to direct the visitor.

For example, add a Talk to Agent button with a Chat Transfer Action so visitors can reach a live agent, and add a Chat Close button so customers can end the chat once their queries are resolved.
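REVE Chat configures these options visually, but the underlying behavior is easy to model. The class below is a hypothetical sketch, not REVE Chat’s API: it answers with the LLM until a limit is reached (or indefinitely when `limit` is `None`, mirroring the Unlimited option) and maps button labels to actions.

```python
class ResponseLimiter:
    """Hand off to a live agent after the bot has answered `limit` queries.

    limit=None models the "Unlimited" option: the bot keeps answering.
    """

    def __init__(self, limit=None):
        self.limit = limit
        self.answered = 0

    def next_action(self):
        """Decide what to do with the visitor's next query."""
        if self.limit is not None and self.answered >= self.limit:
            return "transfer_to_agent"
        self.answered += 1
        return "answer_with_llm"


# Hypothetical custom buttons shown under each LLM response, mapped to actions
BUTTONS = {"Talk to Agent": "transfer_to_agent", "Chat Close": "close_chat"}


def on_button(label):
    """Return the action triggered when a visitor clicks a button."""
    return BUTTONS[label]


limiter = ResponseLimiter(limit=2)
print([limiter.next_action() for _ in range(3)])
# ['answer_with_llm', 'answer_with_llm', 'transfer_to_agent']
print(on_button("Talk to Agent"))
```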

Step 9: Publish and Test the Chatbot

Publish the chatbot and test it with questions about REVE Chat, such as its features; for instance, try asking about the Ticketing feature. The chatbot may take a moment to convert the query into a vector and search the knowledge base for matching content.
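The vector search step can be illustrated with a toy example. Here, word-count vectors and cosine similarity stand in for the learned embeddings a real system would use, and the knowledge-base entries are made-up sample text; it shows the idea of ranking stored content by similarity to the query, not how REVE Chat implements it.

```python
import math
import re
from collections import Counter


def to_vector(text):
    """Toy 'embedding': word counts. Real systems use a learned embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(count * b[word] for word, count in a.items())
    norm = math.sqrt(sum(c * c for c in a.values())) * math.sqrt(sum(c * c for c in b.values()))
    return dot / norm if norm else 0.0


def search(query, chunks, top_k=1):
    """Rank knowledge-base chunks by similarity to the query."""
    q = to_vector(query)
    ranked = sorted(chunks, key=lambda chunk: cosine(q, to_vector(chunk)), reverse=True)
    return ranked[:top_k]


# Made-up sample entries standing in for a trained knowledge base
knowledge_base = [
    "Ticketing feature: turn a chat conversation into a support ticket.",
    "Pricing plans can be billed monthly or annually.",
    "A chatbot can transfer the conversation to a live agent.",
]
print(search("How does the ticketing feature work?", knowledge_base))  # the ticketing entry ranks first
```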

By following these steps, you will have successfully built and deployed a simple LLM Chatbot using Brain AI in REVE Chat, capable of answering visitor queries with relevant, knowledge-based responses.

Future of LLM Chatbots in Business Support

The future of LLM-powered chatbots promises to redefine AI capabilities and reshape customer service and business support. As these models continue to evolve, here are some key advancements that will likely make LLM chatbots even more powerful and versatile:

1. Enhanced Accuracy with Retrieval-Augmented Generation (RAG)

A major leap forward will come from broader adoption of Retrieval-Augmented Generation (RAG). RAG combines language models with real-time data retrieval, allowing chatbots to pull in updated, context-specific information from knowledge bases, databases, and even the web.

This makes responses not only more accurate but also more current, so businesses can give users the latest, most relevant information. Imagine a customer support chatbot that instantly consults a constantly updated database of product manuals or policy changes and gives precise answers that reflect the latest updates.
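In practice, RAG usually means retrieving relevant passages first and then placing them in the prompt sent to the model. This sketch shows only the prompt-assembly step: the retrieval step and the LLM call are omitted, the instruction wording is an assumption, and the policy passages are made up.

```python
def build_rag_prompt(question, retrieved_passages, max_passages=3):
    """Assemble a prompt that grounds the model's answer in retrieved text."""
    context = "\n".join(
        f"[{i}] {passage}"
        for i, passage in enumerate(retrieved_passages[:max_passages], start=1)
    )
    return (
        "Answer the customer's question using only the context below. "
        "If the context does not contain the answer, say you don't know.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}\nAnswer:"
    )


# Made-up passages, as if returned by a search over an up-to-date policy database
passages = [
    "Returns are accepted within 30 days of delivery.",
    "Refunds are issued to the original payment method.",
]
print(build_rag_prompt("Can I return an item after three weeks?", passages))
```

Because the passages are fetched at question time, updating the database changes the answers without retraining the model.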

2. AI Agents for Autonomous Business Functions

LLM chatbots are also likely to evolve into full-fledged AI agents capable of taking on more complex, autonomous tasks. Unlike traditional chatbots that respond only to user prompts, future AI agents could proactively manage tasks, monitor customer accounts, and make recommendations without being prompted.

For instance, an AI agent in a banking context could analyze transaction data to offer proactive financial advice or flag potential fraud, while still interacting seamlessly with customers when necessary.

This level of automation could allow businesses to offer far more personalized and efficient service without relying heavily on human intervention.
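As a rough illustration of the fraud-flagging idea, the sketch below marks a transaction as unusual when it sits far above a customer’s typical spending (mean plus `k` standard deviations). This is a deliberately simple statistical rule with an assumed threshold; production fraud detection relies on much richer models and signals, and an agent would treat a check like this as only one input before contacting the customer.

```python
import statistics


def flag_unusual(history, new_amount, k=3.0):
    """Flag a transaction far above the customer's usual spending.

    Uses a simple mean + k * standard-deviation rule on past amounts.
    """
    if len(history) < 2:
        return False  # not enough history to judge
    mean = statistics.mean(history)
    spread = statistics.stdev(history)
    return new_amount > mean + k * spread


past = [42.0, 55.0, 38.5, 61.0, 47.5]  # made-up past transaction amounts
print(flag_unusual(past, 50.0), flag_unusual(past, 900.0))  # False True
```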

Conclusion

LLM chatbots are transforming the way businesses connect with their customers, offering smarter, faster, and more human-like interactions at scale.

With their ability to handle complex queries, support multiple languages, and streamline operations, they’re not just tools but game-changers in customer engagement and business growth.

Adopting this transformative technology means investing in stronger customer relationships, smarter workflows, and a future where your business thrives.

Frequently Asked Questions

LLM stands for Large Language Model.

An LLM Chatbot is an AI-powered chatbot that uses a large language model to understand and respond to complex user queries naturally.

An LLM is a type of artificial intelligence designed specifically for language understanding and generation.

GPT (Generative Pre-trained Transformer) is a family of LLMs developed by OpenAI, focused on text generation and understanding.

LLMs are trained with deep learning on vast datasets of text, learning patterns that let them interpret context and generate human-like responses, typically by predicting the next word (token) in a sequence.